The Commoditization of the GPU

AI hardware eventually gets commoditized, and it's already happening with an oversupply of GPUs. How can AI service providers combat this?

Before reading this article, if you are in Kelley, please take a few minutes to fill out this survey for Dean’s Insight Board.

Today, I (Nikhil) want to spend a bit of time talking about the commoditization of a product that has been the primary driver behind the AI-led bull market that’s taken place since October of 2022 (albeit with interruptions). The graphics processing unit, which we’ve spoken about in previous articles, provides the massive parallel computing power needed to train large language models and other generative AI systems. This surge in demand for AI infrastructure has driven significant investment and valuation increases across the semiconductor, cloud, and AI industries, making GPUs the backbone of the current AI boom.

This article was inspired by another Substack article written by Eugene Cheah, which I am going to spend a few paragraphs summarizing because it's important context for this topic. I highly recommend reading it in its entirety.

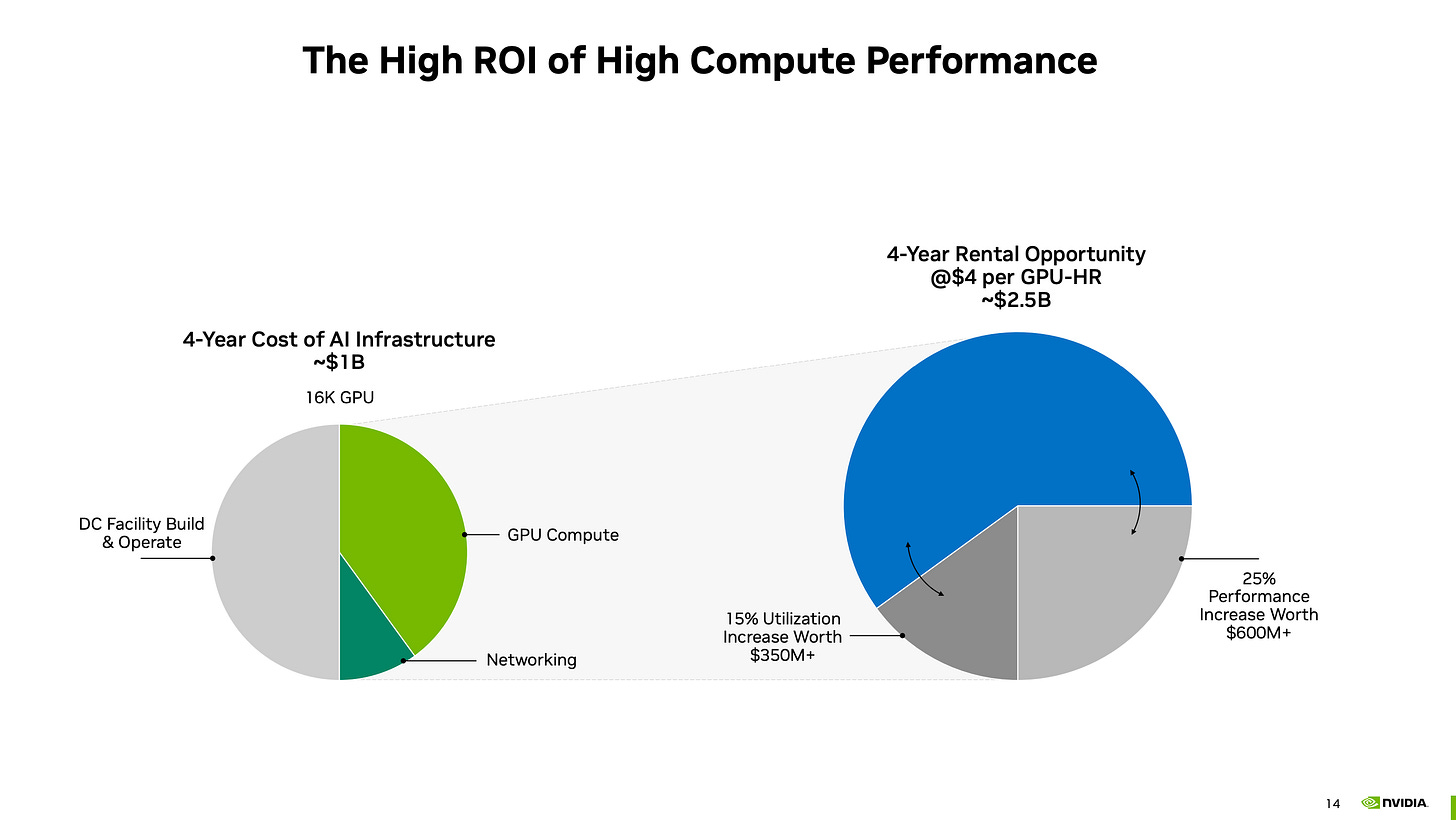

Cheah described the GPU rental market as a burst bubble, noting a 40% year-over-year price drop for smaller clusters. Whereas Nvidia pitched data center providers on renting GPUs at $4 per GPU-hour over 4 years, the oversupply has pushed rental prices down to just $1-2 an hour.
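To put those rates side by side, here is a rough sketch of the gross rental revenue a single GPU would earn over the 4-year horizon. It assumes (my assumptions, not Cheah's) that the GPU is rented around the clock at a flat price, and it ignores operating costs and discounting, so these are upper bounds on revenue rather than profits.

```python
# Gross rental revenue for one GPU at a flat hourly price.
# Hypothetical simplifications: 100% utilization, no operating costs, no discounting.
HOURS_PER_YEAR = 24 * 365  # 8,760 hours


def gross_revenue(price_per_hour: float, years: float = 4, utilization: float = 1.0) -> float:
    """Revenue from one GPU rented at `price_per_hour` for `years` years."""
    return price_per_hour * HOURS_PER_YEAR * years * utilization


pitched = gross_revenue(4.00)  # the $4/hour, 4-year pitch
low = gross_revenue(1.00)      # bottom of the $1-2/hour range Cheah reports
high = gross_revenue(2.00)     # top of that range

print(f"$4.00/hr: ${pitched:,.0f}")
print(f"$1-2/hr:  ${low:,.0f} - ${high:,.0f}")
print(f"Shortfall vs. pitch: {1 - high / pitched:.0%} to {1 - low / pitched:.0%}")
```

Even at the top of today's range, a GPU earns only half of what the pitch implied over the same period.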

The primary culprit for the price fall, according to Cheah, is the rise of open-weight models. Much of the investment made by Microsoft and Amazon was put toward physical infrastructure to power proprietary model training. However, that bet assumed a burgeoning market of SMB model creators seeking domain-specific models built from scratch.

“The arrival of GPT4 class open models (eg. 405B LLaMA3, DeepSeek-v2)” has broken the economics of this assumption by allowing this market (and many others who may previously have considered training their own) to fine-tune existing open-weight models instead. As a result, creators need just 1-2 nodes to build their model instead of the 16+ they would need to train from scratch. With GPU rental costs on the way down, the economics clearly tilt toward open source.
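The node counts translate directly into rental bills. The sketch below assumes 8 GPUs per node (a common H100 server configuration), a hypothetical one-week job, and $2 per GPU-hour. It holds duration fixed to isolate the effect of node count; training from scratch would likely run far longer than a fine-tune, so the real gap is wider than this.

```python
# Rental cost of fine-tuning on 2 nodes vs. training from scratch on 16 nodes.
# Assumptions (hypothetical): 8 GPUs per node, same one-week duration, $2/GPU-hour.
GPUS_PER_NODE = 8


def cluster_cost(nodes: int, hours: float, price_per_gpu_hour: float) -> float:
    """Cost of renting `nodes` nodes for `hours` hours."""
    return nodes * GPUS_PER_NODE * hours * price_per_gpu_hour


HOURS = 168   # one week, a placeholder duration
PRICE = 2.00  # top of the current $1-2/hour range

fine_tune = cluster_cost(nodes=2, hours=HOURS, price_per_gpu_hour=PRICE)
from_scratch = cluster_cost(nodes=16, hours=HOURS, price_per_gpu_hour=PRICE)

print(f"Fine-tune on 2 nodes: ${fine_tune:,.0f}")
print(f"Train on 16 nodes:    ${from_scratch:,.0f}")
print(f"Ratio: {from_scratch / fine_tune:.0f}x")
```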

How does this actually play out in the numbers? The article does a great job of compiling financial metrics which, for the purposes of this article, I will accept as fact. The first graph below is the economics of short-term (“on demand”) H100 leasing.

At $4.50/hour, even when blended, we get to see the original pitch for data center providers from NVIDIA, where they practically print money after 2 years. Giving an IRR (Internal rate of return) of 20+%.

However, at $2.85/hour, this is where it starts to be barely above 10% IRR.

Meaning, if you are buying a new H100 server today, and if the market price is less than $2.85/hour, you can barely beat the market, assuming 100% allocation (which is an unreasonable assumption). Anything, below that price, and you're better off with the stock market, instead of a H100 infrastructure company, as an investment.

And if the price falls below $1.65/hour, you are doomed to make losses on the H100 over the 5 years, as an infra provider. Especially, if you just bought the nodes and cluster this year.
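Cheah's thresholds come from discounted cash-flow math, which can be sketched roughly as follows. Every input here is a hypothetical placeholder of mine rather than a figure from his article: $50,000 of all-in capex per GPU, $0.75 of operating cost per owned hour, a 5-year life, and flat prices (his model uses blended prices). The absolute IRRs will therefore not match his, but the mechanics are the same: IRR is the discount rate at which the investment's NPV is zero (found here by bisection), and the break-even price is the IRR = 0 point. The `utilization` parameter lets you relax the 100% allocation assumption the excerpt flags as unreasonable.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760


def npv(rate: float, cash_flows: list[float]) -> float:
    """Net present value; cash_flows[0] happens today (t = 0)."""
    return sum(cf / (1 + rate) ** t for t, cf in enumerate(cash_flows))


def irr(cash_flows: list[float], lo: float = -0.99, hi: float = 10.0,
        tol: float = 1e-9) -> float:
    """Internal rate of return found by bisection on the NPV.

    Assumes a conventional profile (one outflow up front, then inflows), so NPV
    falls monotonically as the rate rises and there is a single root.
    """
    if npv(lo, cash_flows) * npv(hi, cash_flows) > 0:
        raise ValueError("IRR is not bracketed by [lo, hi]")
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if npv(mid, cash_flows) > 0:
            lo = mid  # NPV still positive, so the IRR lies above mid
        else:
            hi = mid
    return (lo + hi) / 2


def gpu_cash_flows(price: float, capex: float = 50_000.0, opex_per_hour: float = 0.75,
                   years: int = 5, utilization: float = 1.0) -> list[float]:
    """Hypothetical per-GPU cash flows: capex up front, then flat yearly net income.

    Opex is charged on every owned hour; revenue only on rented hours.
    """
    annual = price * HOURS_PER_YEAR * utilization - opex_per_hour * HOURS_PER_YEAR
    return [-capex] + [annual] * years


def break_even_price(capex: float = 50_000.0, opex_per_hour: float = 0.75,
                     years: int = 5, utilization: float = 1.0) -> float:
    """Price at which undiscounted income just repays capex and opex (IRR = 0)."""
    owned_hours = HOURS_PER_YEAR * years
    return (capex + opex_per_hour * owned_hours) / (owned_hours * utilization)


for util in (1.0, 0.7):
    print(f"utilization {util:.0%}: break-even ${break_even_price(utilization=util):.2f}/hr")
    for price in (4.50, 2.85, 1.65):
        flows = gpu_cash_flows(price, utilization=util)
        try:
            print(f"  ${price:.2f}/hr -> IRR {irr(flows):.1%}")
        except ValueError:
            print(f"  ${price:.2f}/hr -> never recovers its costs")
```

Dropping utilization to a hypothetical 70% lowers every IRR and pushes the break-even price up, which is why the 100% allocation assumption matters so much.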

And then there are long-term reservations, the kind an Anthropic or OpenAI would sign to guarantee access to the compute power they need.

So open source has, in many ways, led to the commoditization of this technology. But what is commoditization? Commoditization occurs when the latest innovations lose their luster and become a part of expected daily life. The result of this process is dropping prices and a rise in competition among those who provide the service or goods (sourced from Ges Repair).

Resulting Trend: Compressed Margins, Increased Opportunity for Value-Added Service Revenue in AI Marketplaces (GPUaaS)

This space primarily consists of companies that act as intermediaries for AI resources. I’ve identified hardware marketplaces and model marketplaces as the two opportunities garnering the most investment.

Example use case of an AI marketplace (model marketplace): Medical imaging diagnostics

Imagine you are a medtech company looking to improve diagnostic capabilities by using AI within the medical imaging process to detect early-stage signs of tumors or cysts. It’s a challenging model to build from scratch, so, as we’ve discussed, we will instead use a pre-trained open-weight model. We can take this one step further and use a pre-trained model from an AI model marketplace that specializes in healthcare (see here for how Hugging Face is revolutionizing this).

We save the time and expense of training a model from scratch, while the marketplace offers fine-tuning options to customize the model with our own patient data. You might be thinking that’s a HIPAA violation, but in a rare case of AI getting ahead of regulation, marketplaces have already identified this risk and built models that comply with HIPAA and existing healthcare regulations.

For GPUaaS providers, the commoditization of the GPU will intensify competition and put pressure on margins. Beyond reliably distributing computing power (A100/H100 instances), providers will increasingly compete on price as the technology democratizes to smaller companies looking to work with their currently unstructured data. CoreWeave and Lambda Labs, both emphasizing low-latency deployment, are already racing to undercut the hyperscaler cloud platforms, as noted in a Data Center Dynamics article earlier this month:

Beyond simplicity and speed, another factor that works in the company's favor is cost. AWS, with huge historical margins to defend, can charge more than $12 for an on-demand H100 GPU per hour. Lambda currently charges $2.49 - although a price only comparison leaves it behind CoreWeave, which manages $2.23.

The challenge for Lambda, and others in its situation, is less about attracting developers with its ease and price. It's about keeping them if they grow. Cloud provider DigitalOcean focused on software developers in the pre-AI era, but saw customers 'graduate' to other cloud providers as they reached scale - leaving DO's growth stagnant.

That last point is crucial because these companies have to (1) meet margin targets that satisfy investors, (2) maintain competitive pricing that keeps customers from migrating to competitors, and (3) offer a platform scalable enough that growing companies don’t feel the need (or can delay the need) to migrate to the hyperscaler platforms. That’s a tough tightrope to walk.
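The quoted prices show both sides of that tightrope: what a customer pays and what the provider keeps. The sketch below uses the three per-GPU-hour prices from the Data Center Dynamics excerpt (treating AWS's "more than $12" as a floor of $12) and a hypothetical customer running one 8-GPU node around the clock. The $1.50 all-in provider cost per GPU-hour is a made-up placeholder, and applying one cost figure to every provider is a simplification.

```python
# On-demand H100 prices per GPU-hour quoted in the Data Center Dynamics excerpt.
# AWS is quoted as "more than $12", so 12.00 is a floor, not an exact price.
QUOTED_PRICES = {"AWS": 12.00, "Lambda": 2.49, "CoreWeave": 2.23}

GPUS_PER_NODE = 8         # hypothetical customer: one 8-GPU node
HOURS_PER_MONTH = 730     # 8,760 hours / 12, i.e. running around the clock
COST_PER_GPU_HOUR = 1.50  # hypothetical all-in provider cost (depreciation, power, staff)


def monthly_bill(price: float, gpus: int = GPUS_PER_NODE,
                 hours: float = HOURS_PER_MONTH) -> float:
    """Customer's monthly on-demand bill."""
    return price * gpus * hours


def gross_margin(price: float, cost: float = COST_PER_GPU_HOUR) -> float:
    """Provider's gross margin on one GPU-hour: (price - cost) / price."""
    return (price - cost) / price


for provider, price in QUOTED_PRICES.items():
    print(f"{provider:<10} bill ${monthly_bill(price):>8,.0f}/month   "
          f"margin {gross_margin(price):.0%}")
```

Each cent a challenger shaves off its price to win customers comes straight out of that margin, which is the pressure described above.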

One way to walk that tightrope is through value-added service revenue. CoreWeave already offers a “One-Click Model” service, allowing users to deploy an open-source ML model like GPT-J. But as model needs become more domain-specific, service providers need to expand their model selection into a larger library of pre-trained models, similar to the catalog Hugging Face offers. This lets providers counter the margin pressure from commoditized hardware by recommending models that can then be deployed on their systems for a specific amount of GPU time. In fact, DigitalOcean (stock chart above) announced a partnership with Hugging Face (HF) last week to, in their words, solve the following problems…

1-click deployable models: Deploy popular AI models like Llama 3 by Meta and Mistral with just one click on GPU Droplets powered by NVIDIA H100s accelerated computing.

Simplified Setup: Utilizing 1-Click Models on DigitalOcean removes infrastructure complexities, allowing you to focus on building with model endpoints immediately without the need for complex setup or software configurations.

Optimized Performance: 1-Click Models are end-to-end optimized to run on high-performance GPU Droplets, ensuring models run on cutting-edge DigitalOcean hardware with minimal overhead.

A few different pricing strategies are achievable with this…

Freemium: Everybody’s favorite. Free access to the core features, but with limited usage hours and crappy GPUs, so it’s not really free. That way, if customers want access to better GPUs (e.g. H100 or A100) and advanced HF models, you can start pricing these at $2.50 to $4.00 per hour. This could be structured as tiered pricing, getting providers back toward the profitable range for on-demand leasing discussed above.

Pay per model: A bit riskier for HF, because it risks diluting the value of its catalog if only a few models get high usage, but advanced models could carry a $50-$100 per-user deployment fee that lets both companies monetize the value of the model integrated with the deployment platform. Post-deployment, you’d still have standard hourly pricing, which could be upsold on the strength of access to HF’s best models.

Bundled subscription plans with HF Premium: If any of you have tried downloading stock price data from Yahoo Finance recently, you’ve encountered this (see image below). Subscription bundles can have a starter plan, a pro plan, an expert plan, an Einstein plan, and however many tiers a DigitalOcean deems appropriate.

I personally think GPUaaS providers should be implementing this pricing model as fast as possible. Bundled pricing gives users a clear, predictable cost structure, which could appeal to smaller companies seeking to avoid unexpected expenses from separately priced components. For sellers, bundles create a stickier service by integrating multiple components the user needs, which, as with software, can be upsold to drive ARPU (average revenue per user). There will be a recurring need, because AI models continuously require updates to adapt to new data or changing business goals.

Examples include a financial services system that must adapt to changing risk tolerances based on regulations, or dynamic recommendation systems for e-commerce.
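The three pricing strategies can be compared on a single hypothetical customer. Every number below is a placeholder of mine: the monthly usage pattern, the 20 free hours, the $75 deployment fee (the midpoint of the $50-$100 range), the $4.00 and $2.50 hourly rates (the ends of the range from the freemium example), and the $500 plan with 200 included hours. The sketch totals each strategy's bills and measures how much the monthly bill swings, which is the predictability argument for bundles.

```python
import statistics

# Hypothetical monthly GPU-hours for one small customer with uneven demand.
USAGE = [40, 120, 60, 200, 80, 150]


def freemium_bill(hours: float, free_hours: float = 20, paid_rate: float = 4.00) -> float:
    """Free allowance first, then a paid tier billed hourly."""
    return max(0.0, hours - free_hours) * paid_rate


def pay_per_model_bill(hours: float, month: int, deployment_fee: float = 75.0,
                       hourly_rate: float = 2.50) -> float:
    """One-time deployment fee in the first month, then standard hourly billing."""
    fee = deployment_fee if month == 0 else 0.0
    return fee + hours * hourly_rate


def bundle_bill(hours: float, plan_price: float = 500.0, included_hours: float = 200,
                overage_rate: float = 3.00) -> float:
    """Flat subscription covering `included_hours`, with overage billed hourly."""
    return plan_price + max(0.0, hours - included_hours) * overage_rate


bills = {
    "freemium": [freemium_bill(h) for h in USAGE],
    "pay per model": [pay_per_model_bill(h, m) for m, h in enumerate(USAGE)],
    "bundle": [bundle_bill(h) for h in USAGE],
}

for name, monthly in bills.items():
    print(f"{name:<14} total ${sum(monthly):>8,.2f}   "
          f"month-to-month std dev ${statistics.pstdev(monthly):>7,.2f}")
```

With these inputs the bundle produces the same $500 bill every month while the metered strategies swing with usage. The customer also pays more in total under the bundle, and that premium is the ARPU upside the seller captures in exchange for predictability.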

Additionally, as inference workloads intensify, consistent GPU availability will be necessary, and not everyone will be able to afford it from the hyperscalers. Although the trend of falling rental prices lends itself to the on-demand model, the stickiness of an integrated application with model libraries could be structured into longer term contracts that provide better revenue predictability.