Minutes From A Meeting With A Memory Maestro

Viraj Lakhotia and I (Nikhil) recently met with an advanced memory expert to hash out an investment thesis on the memory space.

Is memory commoditized? Right now, Nvidia is supplied by SK Hynix and Micron, and Samsung's approval is the announcement everyone is waiting on. If Samsung gets approved, what changes?

Memory is commoditized, and has been for decades. The global memory market is dominated by SK Hynix, Micron and Samsung, which together control 75% of supply in an inherently cyclical industry. These players react to one another's moves to balance supply and demand. Examples the expert provided include:

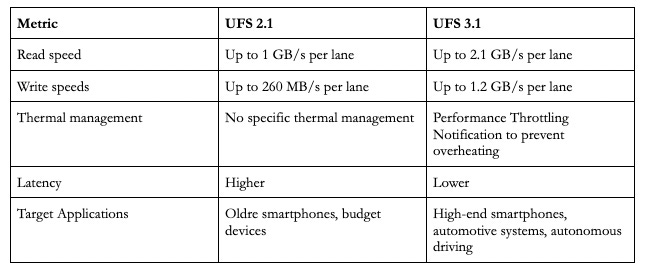

If one player says it will support universal flash storage (UFS) 2.1 for the next two years, the other two will move in lockstep.

UFS is a storage standard for smartphones and tablets. The differences between UFS 2.1 and UFS 3.1 can be seen below. Generally speaking, if a memory player were to support UFS 2.1 for an extended period, I'd imagine this would help support pricing: by not oversupplying the newer UFS 3.1, suppliers can keep its prices high, which lets UFS 2.1 remain profitable.

The introduction of HBM has worsened the commoditization issue. In NAND, the focus has shifted to higher bit densities (e.g. quad-level cell, or QLC) and higher layer counts, and the expert believes HBM is following the same trend: the push for greater performance and density is coming at the expense of differentiation.

Why is performance ≠ differentiation?

Performance improvements in memory (e.g. higher HBM bandwidth or layer counts) are often incremental and matched by competitors. This is why Nvidia can source its memory from all three suppliers and achieve roughly the same results. The differentiation I'm referring to has nothing to do with performance-related measures, but rather with the thermal solutions each company can develop to address the power and heat dissipation issues plaguing its end customers. Reliability and endurance for continuous operations (more on that below) are another area where manufacturers are looking to differentiate.

It'll be interesting to see whether the reported thermal issues with Nvidia's 72-GPU Blackwell server rack force Nvidia to give thermal performance more weight in its production process, and what kind of pressure that would place on the HBM manufacturers.

Both NAND and HBM manufacturers are focused on increasing storage and processing efficiency within constrained physical and thermal limits.

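Thermal is the big constraining factor (more on this below) because of the end of Dennard scaling around 2005: once shrinking transistors stopped delivering proportional drops in power, power density, and therefore heat, began rising with each generation.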

Why are manufacturers so hyperfocused on this issue? AI and Memory Wall, a great paper written by professors at Berkeley, shows that compute scaling has drastically outpaced memory bandwidth scaling, hence the urgency.

The availability of unprecedented unsupervised training data, along with neural scaling laws, has resulted in an unprecedented surge in model size and compute requirements for serving/training LLMs. However, the main performance bottleneck is increasingly shifting to memory bandwidth. Over the past 20 years, peak server hardware FLOPS has been scaling at 3.0x/2yrs, outpacing the growth of DRAM and interconnect bandwidth, which have only scaled at 1.6 and 1.4 times every 2 years, respectively. This disparity has made memory, rather than compute, the primary bottleneck in AI applications, particularly in serving.
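Taking the paper's per-two-year rates at face value and compounding them over its 20-year window shows how wide the gap becomes. This is a minimal sketch under a simplifying assumption: the rates are treated as constant for the whole period, and the names `RATES_PER_2YR`, `cumulative_growth` and `growth_table` are our own, not from the paper.

```python
# Per-two-year growth multiples as quoted in "AI and Memory Wall".
# Assumption: each rate is treated as constant over the whole 20-year window.
RATES_PER_2YR = {
    "peak server FLOPS": 3.0,
    "DRAM bandwidth": 1.6,
    "interconnect bandwidth": 1.4,
}


def cumulative_growth(rate_per_2yr: float, years: float) -> float:
    """Compound a per-two-year growth multiple over `years` years."""
    return rate_per_2yr ** (years / 2)


def growth_table(years: float = 20) -> dict[str, float]:
    """Cumulative growth multiple for each metric over `years` years."""
    return {name: cumulative_growth(r, years) for name, r in RATES_PER_2YR.items()}


if __name__ == "__main__":
    table = growth_table(20)
    for name, multiple in table.items():
        print(f"{name:>24}: {multiple:,.0f}x over 20 years")
    # How much faster compute grew than DRAM bandwidth over the same window
    gap = table["peak server FLOPS"] / table["DRAM bandwidth"]
    print(f"compute outgrew DRAM bandwidth by ~{gap:,.0f}x")
```

Compounded over ten two-year periods, the quoted rates give roughly 59,000x for FLOPS versus about 110x for DRAM bandwidth and 29x for interconnect, so compute outgrew DRAM bandwidth by a factor of more than 500.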

What are some of the restricting factors to innovation?

When you hear about limitations in terms of frequency and throughput, it's less about raw capacity and more about high-speed data transmission creating so much heat that the chips break down over time. This is particularly due to continuous operation requirements, which refers to the need for memory chips in data centers and AI accelerators to run constantly, unlike consumer devices, which can idle.

Another challenge is that optimal performance requires memory to be closely integrated with the SoC to reduce signal loss and latency. That tight integration, however, leaves less room for cooling mechanisms, bringing us back to the heat issue. Our takeaway from the conversation was that Hynix and Micron are focusing on incremental thermal improvements, while Samsung seems to be taking a riskier path to reimagine thermal management, which has caused delays but could produce a longer-term breakthrough.

Let's walk through how continuous operation requirements decay a chip over time:

1. Chips running at high frequencies generate heat.

2. Excessive heat from constant data transmission creates thermal stress, degrading the silicon.

3. Heat and electrical stress degrade the materials that insulate the transistors, causing leakage currents that reduce efficiency and can lead to short circuits.

4. As damage accumulates, chips experience timing issues and higher power consumption.

Step 4 is where the damage becomes a real operating problem.
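A common way reliability engineers model this kind of heat-driven wear is the Arrhenius equation, in which a thermally activated failure mechanism speeds up exponentially with temperature. The sketch below is our own illustration, not the expert's model: the 0.7 eV activation energy, the temperatures and the duty cycles are hypothetical placeholders, and real wear-out mechanisms have their own parameters. It compares an always-on data center profile with a consumer profile that idles most of the time.

```python
import math

BOLTZMANN_EV_PER_K = 8.617e-5  # Boltzmann constant in eV/K


def arrhenius_acceleration(t_c: float, t_ref_c: float, ea_ev: float = 0.7) -> float:
    """Factor by which a thermally activated wear mechanism runs faster at t_c than at t_ref_c.

    ea_ev (activation energy, eV) is a hypothetical placeholder, not a measured value.
    """
    t_k, t_ref_k = t_c + 273.15, t_ref_c + 273.15  # convert Celsius to Kelvin
    return math.exp((ea_ev / BOLTZMANN_EV_PER_K) * (1 / t_ref_k - 1 / t_k))


def relative_wear_rate(duty_cycle: float, t_busy_c: float, t_idle_c: float,
                       t_ref_c: float = 55.0) -> float:
    """Time-weighted wear rate relative to running continuously at t_ref_c.

    duty_cycle is the fraction of time spent busy (hot); the remainder is spent idle (cooler).
    """
    busy = arrhenius_acceleration(t_busy_c, t_ref_c)
    idle = arrhenius_acceleration(t_idle_c, t_ref_c)
    return duty_cycle * busy + (1 - duty_cycle) * idle


if __name__ == "__main__":
    # Hypothetical profiles: an accelerator that never idles vs. a phone idling 80% of the time
    always_on = relative_wear_rate(duty_cycle=1.0, t_busy_c=85, t_idle_c=85)
    mostly_idle = relative_wear_rate(duty_cycle=0.2, t_busy_c=85, t_idle_c=40)
    print(f"always-on wears {always_on / mostly_idle:.1f}x faster than mostly-idle")
```

Under these made-up inputs the always-on profile wears a little over 4x faster; the exact number is meaningless, but the direction illustrates why continuous operation is harder on silicon than a duty cycle with idle time.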

Can you walk through some of the production process differences between the biggest players?

SK Hynix

Focus: pushing speed boundaries and bandwidth capabilities

Innovation edge: Historically leads in scaling data throughput and achieving higher performance metrics, making it a go-to for HPC

HBM4 approach: likely to prioritize speed and throughput improvements

Challenge: Thermal

Micron

Focus: balancing speed, durability and reliability

Innovation edge: Known for error correction, making their memory historically durable for continuous ops

HBM4 approach: Continues to balance performance and reliability but acknowledges bit-flip concerns are less critical for HBM since it operates at consistent temperatures

Bit flips occur when a memory cell accidentally flips its value (from 0 to 1 or from 1 to 0), resulting in incorrect data being read or written. Heat generated during chip operation is one trigger, since it disrupts the stability of the memory cells.

Challenge: Staying competitive on speed with SK Hynix while maintaining its reliability edge
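Micron's edge above is error correction, and the bit-flip note describes the failure it guards against. The sketch below uses the textbook Hamming(7,4) code to show how a single flipped bit can be located and repaired. It is a generic illustration, not Micron's actual ECC scheme (production memory ECC works on much wider words), and the function names are hypothetical.

```python
def hamming74_encode(data: list[int]) -> list[int]:
    """Encode 4 data bits into a 7-bit codeword laid out as positions 1..7 = p1 p2 d1 p3 d2 d3 d4."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4  # parity over positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # parity over positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4  # parity over positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]


def hamming74_decode(code: list[int]) -> tuple[list[int], int]:
    """Correct up to one flipped bit; return (data bits, 1-based error position or 0 if clean)."""
    b = [0] + list(code)  # pad so b[1]..b[7] match the position numbering
    s1 = b[1] ^ b[3] ^ b[5] ^ b[7]
    s2 = b[2] ^ b[3] ^ b[6] ^ b[7]
    s3 = b[4] ^ b[5] ^ b[6] ^ b[7]
    error_pos = s1 + 2 * s2 + 4 * s3  # the syndrome spells out the bad position in binary
    if error_pos:
        b[error_pos] ^= 1  # flip the corrupted bit back
    return [b[3], b[5], b[6], b[7]], error_pos


def flip_bit(code: list[int], pos: int) -> list[int]:
    """Simulate a single bit flip at 1-based position pos."""
    out = list(code)
    out[pos - 1] ^= 1
    return out


if __name__ == "__main__":
    stored = hamming74_encode([1, 0, 1, 1])
    corrupted = flip_bit(stored, 5)
    recovered, pos = hamming74_decode(corrupted)
    print(recovered, pos)  # prints [1, 0, 1, 1] 5
```

The decoder identifies position 5 as the flipped bit and restores the original data; a second flip in the same word would exceed what this simple code can correct.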

Samsung

Focus: scaling production volume and introducing new process technology

Innovation edge: strong in vertical integration and efficiency

HBM4 approach: Behind SK Hynix and Micron currently, but aiming to innovate around thermal and power issues. HBM3E could really help them reclaim competitiveness

Challenge: Catching up in speed and thermal while maintaining cost advantage

On pricing and longevity…

Historically, Hynix has always been the lowest-priced, with Micron slightly above and Samsung slightly above Micron. Our expert works for a U.S.-based company and noted that negotiations with Micron are more transparent because it is a domestic company. Samsung's and Hynix's U.S.-based teams have to liaise with headquarters in Korea, which can reduce pricing transparency.

Hynix tends to be much more focused in its product line, keeping its SKU count low, whereas Samsung and Micron make more products that require coordination at the fab level. Hynix doesn't expand beyond a certain number of products, which reminded me of the Acquired episode on Costco (this might be a stretch):

Costco, in the last 10 years, was around 4500, and then they looked and said, can we bring it down? It went to 4000. Today, they're sitting at 3800. This number is still going down, not up. If you do the math and you start thinking, geez, if you're not selling a lot of SKUs, but you have a lot of customers coming through your stores, what does that mean? It means that any given item is going to turn faster. It's this magical unlock, in addition to the instantly available for sale in the warehouse thing. It is the low SKU count that directly gives you the ability to turn your inventory over quickly.
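The "do the math" step in the quote reduces to a ratio. A minimal sketch, assuming (hypothetically) that total units sold and the stock held per SKU stay fixed so only the SKU count changes; the volume and depth figures are invented for illustration, and only the SKU counts come from the quote.

```python
def inventory_turns(annual_units_sold: float, sku_count: int, units_per_sku: float) -> float:
    """Inventory turnover: units sold per year divided by average units on hand."""
    average_inventory = sku_count * units_per_sku  # total stock if every SKU is held at the same depth
    return annual_units_sold / average_inventory


if __name__ == "__main__":
    # Hypothetical inputs: same traffic (units sold) and same depth per SKU; only the SKU count changes
    UNITS_SOLD = 1_000_000_000
    DEPTH_PER_SKU = 5_000
    baseline = inventory_turns(UNITS_SOLD, 4500, DEPTH_PER_SKU)
    for skus in (4500, 4000, 3800):
        turns = inventory_turns(UNITS_SOLD, skus, DEPTH_PER_SKU)
        print(f"{skus} SKUs: {turns:.1f} turns/yr ({turns / baseline:.2f}x baseline)")
```

The invented volume and depth cancel out of the comparison: going from 4,500 to 3,800 SKUs gives 4500/3800, or roughly 1.18x the turns, whatever the absolute numbers are.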

Keeping a low SKU count may not speed up turnover in memory to the degree it does in big-box retail, but Hynix is constantly pushing the speed boundaries and is known as the best innovator of the three. I wouldn't be surprised if spending less attention on inventory management is part of what lets it do that.

As for longevity, Micron seems to be the leader, as it has fabs dedicated solely to supporting older technology. Investors seem to care very little about that at the time of publishing (Micron's stock is down 16% today after the company issued a weak earnings outlook last night).

Our Takeaways

My view is that Hynix's advantage on speed and price gives it the best position right now, while Samsung is a deeper value play if it can solve its thermal issues. Samsung's problem lies less with memory and more with the rest of the business: exposure to a foundry business that is losing market share, with reportedly horrendous yields, should weigh on investors in the near term. Micron seems to have the cleanest HBM model and warrants a premium for its better-integrated domestic operations, but its outsized exposure to the flailing PC market likely justifies today's correction. One thing to note: as hyperscalers progress further in building their own chips, it would likely make more sense for them to work with a domestic operator like Micron, given the longevity advantage noted above.